The global coronavirus pandemic has triggered worldwide panic as the numbers of victims explode and economies implode, as physical movement and social interactions wither in lockdowns, as apocalyptic projections of its destructive reach soar, and as unprepared or underprepared national governments and international agencies desperately scramble for solutions.

The pandemic has exposed the daunting deficiencies of public health systems in many countries. It threatens cataclysmic economic wreckage as entire industries, global supply chains, and stock markets collapse under its frightfully unpredictable trajectory. Its social, emotional, and mental toll is as punishing as it is paralysing for multitudes of people increasingly isolated in their homes as the public life of work spaces, travel, entertainment, sports, religious congregations, and other gatherings grinds to a halt.

Also being torn asunder are cynical ideological certainties and the political fortunes of national leaders as demands grow for strong and competent governments. The populist revolt against science and experts has received its comeuppance as the deadly costs of pandering to mass ignorance mount. At the same time, the pandemic has shattered the strutting assurance of masters of the universe as they either catch the virus or as it constrains their jet-setting lives and erodes their bulging equity portfolios.

Furthermore, the coronavirus throws into sharp relief the interlocked embrace of globalisation and nationalism, as the pandemic leaps across the world showing no respect for national boundaries, and countries seek to contain it by fortifying national borders. It underscores the limits of both the neo-liberal globalisation that has reigned supreme since the 1980s and the populist nationalisms that have bestridden the world since the 2000s, which emerged partly out of the deepening social and economic inequalities spawned by the former.

These are some of the issues I would like to reflect on in this essay on the political economy of the coronavirus pandemic. As historians and social scientists know all too well, any major crisis is always multifaceted in its causes, courses, and consequences. Disease epidemics are no different. In short, understanding the epidemiological dimensions and dynamics of the coronavirus pandemic is as important as analysing its economic, social, and political impact. Moments of crisis always have their fear-mongers and skeptics. The role of progressive public intellectuals is to provide sober analysis.

In the Shadows of 1918-1920

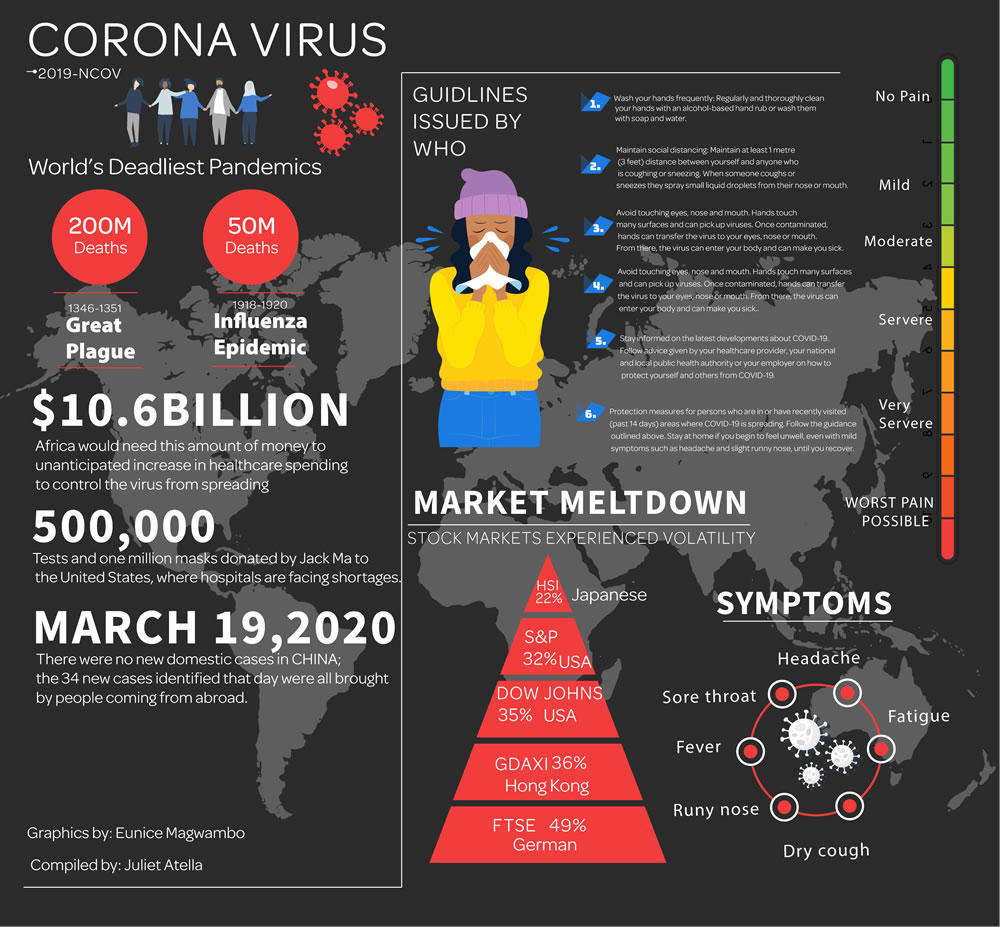

The coronavirus pandemic is the latest and potentially one of the most lethal global pandemics in a long time. One of the world’s deadliest pandemics was the Great Plague of 1346-1351, which ravaged large parts of Eurasia and Africa. It killed between 75 and 200 million people, and wiped out 30 per cent to 60 per cent of the European population. The plague was spread by fleas carried by rats, underscoring humanity’s vulnerability to the lethal power of small and micro-organisms, notwithstanding the conceit of its mastery over nature. The current pandemic shows that this remains true despite all the technological advances humanity has made since then.

Over a century ago, as World War I came to an end, an influenza epidemic, triggered by a virus transmitted from animals to humans, ravaged the globe. One-third of the world’s population was infected, and it left 50 million people dead. It was the worst pandemic of the 20th century. It was bigger and more lethal than the HIV/AIDS epidemic of the late 20th century. But for a world then traumatised by the horrors of war it seemed to have left a limited impact on global consciousness.

Some health experts fear Covid-19, as the disease caused by the new strain of coronavirus has been named, might rival the influenza epidemic of 1918. But there are those who caution that history is sometimes not kind to moral panics, that similar hysteria was expressed following the outbreaks in the 2000s and 2010s of bird flu and swine flu, of SARS, MERS and Ebola, each of which was initially projected to kill millions of people. Of course, nobody really knows whether or not the coronavirus pandemic of 2020 will rival the influenza pandemic of 1918-1920, but the echoes are unsettling: its mortality rate seems comparable, as does its explosive spread.

The devastating power of Covid-19 is wracking and humbling every country, economy, society, and social class, although the pervasive structural and social inscriptions of differentiation still shape, in formidable and discriminatory ways, capacities for prevention and survival. In its socioeconomic and political impact alone, Covid-19 has already made history. One lesson from the influenza pandemic that applies to the current coronavirus pandemic is that countries, cities and communities that took early preventive measures fared much better than those that did not.

Doctors’ Orders

Since Covid-19 broke out in Wuhan, China, in late December 2019, international and national health organisations and ministries have issued prevention guidelines for individuals and institutions. Most of the recommended measures reflect guidelines issued by the World Health Organization.

But the pandemic is not just about physical health. It is also about mental health. Writing in The Atlantic magazine of March 17, 2020, on how to stay sane during the pandemic one psychotherapist notes, “You can let anxiety consume you, or you can feel the fear and also find joy in ordinary life, even now”. She concludes, “I recommend that all of us pay as much attention to protecting our emotional health as we do to guarding our physical health. A virus can invade our bodies, but we get to decide whether we let it invade our minds”.

A Kenyan psychology professor advises her readers in the Sunday Nation of March 23, 2020, to cultivate a positive mindset. “Take only credible sources of information . . . Don’t consume too much data, it can be overwhelming. You may be in isolation but very noisy within yourself. Learn to relax and to convert your energy into other activities in order to nurture your own mental health . . . Such as gardening, learning a language, doing an online course, painting or read that book. Do the house chores, trim the flowers, paint, do repairs, clean the dust in those corners we always ignore . . . Exercise . . . Talk to someone if you feel terrified, empty, hopeless, and worthless. These are creeping signs of depression. This too will pass: Believe me that there will be an end to this”.

Scramble for Containment

Many governments were caught unprepared or underprepared by the coronavirus pandemic. Some even initially dismissed the threat. This was particularly the case among populist rightwing governments, such as the administrations of President Trump of the United States, Prime Minister Johnson of the United Kingdom, and President Bolsonaro of Brazil. As populists, they had risen to power on a dangerous brew of nationalist and nativist fantasies of reviving national greatness and purity, xenophobia against foreigners, and manufactured hatred for elites and experts.

To rightwing ideologues the coronavirus was a foreign pathogen, a “Chinese virus” according to President Trump and his Republican followers in the United States, that posed no threat to the nation quarantined in its splendid isolation of renewed greatness. Its purported threat was fake news propagated by partisan Democrats, or disgruntled left-wing labour and liberal parties in the case of the United Kingdom and Brazil that had recently been vanquished at the polls.

Such was the obduracy of President Trump that he and his team ignored not only frantic media reports about the pandemic leaping across the world, but also ominous, classified warnings issued by the U.S. intelligence agencies throughout January and February. Instead, he kept assuring Americans in his deranged twitterstorms that there was little to worry about, that “I think it’s going to work out fine,” that “The Coronavirus is very much under control in the USA”.

Trump’s denialism was echoed by many leaders around the world, including in Africa. This delayed the much-needed preemptive action that would have limited the spread and potential impact of the coronavirus firestorm. In fact, as early as 2012 a report by the Rand Corporation warned that only pandemics were “capable of destroying America’s way of life”. The Obama administration proceeded to establish the National Security Council directorate for global health and security and bio-defense, which the Trump administration closed in 2018. On the whole, global pandemics have generally not been taken seriously by security establishments in many countries preoccupied with conventional wars, terrorism, and the machismo of military hardware.

In the meantime, China, the original epicenter of the pandemic, took draconian measures that locked down Wuhan and neighbouring regions, a step that was initially dismissed by many politicians and pundits in “western democracies” as a frightful and unacceptable example of Chinese authoritarianism. As the pandemic ravaged Italy, which became the coronavirus epicenter in Europe and a major exporter of the disease to several African countries, regional and national lockdowns were embraced as a strategy of containment.

Asian democracies such as South Korea, Japan, Taiwan, and Singapore adopted less coercive and more transparent measures. Already endowed with good public health systems capable of handling major epidemics, a capability enhanced by the virus epidemics of the 2000s and 2010s, they developed effective and vigilant monitoring systems encompassing early intervention, meticulous contact tracing, mandatory quarantines, social distancing, and border controls.

Various forms of lockdown, some more draconian than others, were soon adopted in many countries and cities around the world. They encompassed the closure of offices, schools and universities, and entertainment and sports venues, as well as banning of international flights and even domestic travel. Large-scale disinfection drives were also increasingly undertaken. The Economist of March 21, 2020 notes in its lead story that China and South Korea have effectively used “technology to administer quarantines and social distancing. China is using apps to certify who is clear of the disease and who is not. Both it and South Korea are using big data and social media to trace infections, alert people of hotspots and round contacts”.

Belatedly, as the pandemic flared in their countries, the skeptics began singing a different tune, although a dwindling minority complained of overreaction. Befitting the grandiosity of populist politicians, they suddenly fancied themselves as great generals in the most ferocious war in a generation. Some commentators found the metaphor of war obscene for its self-aggrandisement of clueless leaders anxious to burnish their tattered reputations and accrue more gravitas and power. Bombastic, narcissistic, and pathological liar that he is, President Trump sought to change the narrative, claiming that he had foreseen the pandemic notwithstanding his earlier dismissals of its seriousness.

His British counterpart, Prime Minister Johnson, vainly tried a Churchillian impersonation, which was met with widespread derision in the media. The more either of them spoke to reassure the public, the clearer it became that they were out of their depth, that they did not have the intellectual and political capacity to calm the situation. It was a verdict delivered with painful cruelty by the stock markets that they adore, which fell sharply each time the two gave a press conference and announced half-baked containment measures.

Initially, many of Africa’s inept governments remained blasé about the pandemic even allowing flights to and from China, Italy and other countries with heavy infection rates. Cynical citizens with little trust in their corrupt governments to manage a serious crisis sought comfort in myths peddled on social media about Africa’s immunity because of its sunny weather, the curative potential of some concoctions from disinfectants to pepper soup, the preventive potential of shaving beards, or the protective power of faith and prayer.

But as concerns and outrage from civil society mounted, and opportunities for foreign aid rose, some governments went into rhetorical overdrive that engendered more panic than reassurance. It has increasingly become evident that Africa needs unflinching commitment and massive resources to stem the rising tide of coronavirus infections. According to one commentator in The Sunday Nation of March 22, “It is estimated that the continent would need up to $10.6 billion in unanticipated increases in health spending to curtail the virus from spreading”. He advises the continent to urgently implement the African Continental Free Trade Area, and work with global partners.

In Kenya, some defiant politicians refused to self-quarantine after coming from coronavirus-stricken countries, churches resisted closing their doors, and traders defied orders to close markets. This forced the government to issue draconian containment measures on March 22, 2020, stipulating that all those who violated quarantine measures would be forcefully quarantined at their own expense, all gatherings at churches and mosques were suspended, weddings were no longer allowed, and funerals would be restricted to 15 family members.

The infodemic of false and misleading information, as the WHO calls it, was of course not confined to Africa. It spread like wildfire around the world. So did coronavirus fraudsters peddling fake information and products to desperate and unwary recipients. In Britain, the National Fraud Intelligence Bureau was forced to issue urgent scam warnings against emails and text messages purporting to be from reputable research and health organisations.

The coronavirus pandemic showed up the fecklessness of some political leaders and the incompetence of many governments. The neo-liberal crusade against “big government” that had triumphed since the turn of the 1980s suddenly looked threadbare. And so did the populist zealotry against experts and expertise. The valorisation of the politics of gut feelings masquerading as gifted insight and knowledge suddenly vanished into puffs of ignoble ignorance that endangered the lives of millions of people. People found more solace in the calm pronouncements of professional experts, including doctors, epidemiologists, researchers and health officials, than in those of loquacious politicians.

Populist leaders like President Trump and Prime Minister Johnson and many others of their ilk had taken vicarious pleasure in denigrating experts and expert knowledge, and decimating national research infrastructures and institutions. Suddenly, at their press conferences they were flanked by trusted medical and scientific professionals and civil servants as they sought to bask in the latter’s reassuring glow. But that could not restore public health infrastructures overnight, severely damaged as they were by indefensible austerity measures and the pro-rich transfers of wealth adopted by their governments.

Economic Meltdown

When the coronavirus pandemic broke out, many countries were unprepared for it. There were severe shortages of testing kits and health care facilities. Many also lacked universal entitlement to healthcare and social safety nets, including basic employment rights and unemployment insurance, that could mitigate some of the worst effects of the pandemic’s economic impact. All this ensured that the pandemic would unleash mutually reinforcing health and economic crises.

The signs of economic meltdown escalated around the world. Stock markets experienced a volatility that ran out of superlatives. In the United States, from early February to March 20, 2020 the Dow Jones Industrial Average fell by about 10,000 points or 35%, while the S&P fell by 32%. In Britain, the FTSE fell by 49% from its peak earlier in the year, the German GDAXI by 36%, the Hong Kong HSI by 22%, and the Japanese Nikkei by 32%. Trillions of dollars were wiped out. In the United States, the gains made under President Trump vanished as the market fell to the levels left by his nemesis President Obama, depriving the market-obsessed president of one of his favourite talking points and justifications for re-election.

There are hardly any parallels to a pandemic leading to markets crumbling the way they have following the coronavirus outbreak. They did not do so during the 1918-1920 influenza pandemic, although they fluctuated thereafter. Closer to our times, during the flu pandemic of 1957-1958 the Dow fell about 25 per cent, while the SARS and MERS scares of the early 21st century had relatively limited economic impact. Some economic historians warn, however, that the stock market isn’t always a good indicator or predictor of the severity of a pandemic.

The sharp plunge in stock markets reflected a severe economic downturn brought about by the coronavirus pandemic as one industry after another went into a tailspin. The travel, hospitality and leisure industries encompassing airlines, hotels, restaurants, bars, sports, conventions, exhibitions, tourism, and retail were the first to feel the headwinds of the economic slump as people escaped or were coerced into the isolation of their homes. For example, hotel revenues in the United States plummeted by 75 per cent on average, worse than during the Great Recession and the aftermath of the 9/11 terrorist attacks combined.

Other industries soon followed suit as supply chains were scuppered, profits and share prices fell, offices closed, and staff were told to work from home. Manufacturing, construction, and banking have not been spared. Big technology manufacturing has also been affected by factory shutdowns and postponed product launches. Neither was the oil industry safe. With global demand falling, and the price war between Saudi Arabia and Russia escalating, oil prices fell dramatically to $20.3 per barrel, a fall of 67 per cent since the beginning of 2020. Some predicted oil at $5 per barrel.

The oil price war threatened to decimate smaller or poorer oil producers from the Gulf states to Nigeria. It also threatened the shale oil industry in the United States because of its high production costs, thereby depriving the country of its newly acquired status as the largest oil producer in the world, to the chagrin of Russia and OPEC. Many of the US shale oil companies face bankruptcy as their production costs are fourteen times higher than Saudi Arabia’s production costs, and they need prices of more than $40 per barrel to cover their direct costs.

Falling oil prices, combined with growing concerns about climate change, dented the prospects of several oil exploration and production companies, such as the British company Tullow, which has ambitious projects in Kenya, Uganda, and Ghana. This threatened these countries’ aspirations to join the club of major oil-producing nations. In early March 2020, one of Tullow’s major investors, BlackRock, the world’s biggest asset manager with some $7 trillion under management, made it clear it was losing interest in fossil fuel investment.

Such are the disruptions caused by the coronavirus pandemic that 51 per cent of economists polled by the London School of Economics believe “the world faces a major recession, even if COVID-19 kills no more people than seasonal flu. Only 5% said they did not think it would.” According to a survey reported by the World Economic Forum, “The public sees coronavirus as a greater threat to the economy than to their health, new research suggests. Economic rescue measures announced by governments do not appear to be calming concern . . . The majority of people in most countries polled expect to feel a personal financial impact from the coronavirus pandemic, according to the results. Respondents in Vietnam, China, India and Italy show the greatest concern”.

Many economies spiraled into recession. The major international financial institutions and development agencies have revised world, regional, and national economic growth prospects for 2020 downwards, sometimes sharply so. Estimates by Frost & Sullivan, a consultancy firm, show that world GDP which grew by 3.5% in 2018 and 2.9% in 2019, will slide to 1.7% if the coronavirus pandemic becomes prolonged and severe, and it might take up to a year or more for the world economy to recover. The OECD predicts that “Global growth could drop to 1.5 per cent in 2020, half the rate projected before the virus outbreak. Recovery much more gradual through 2021”.

The OECD Economic Outlook, Interim Report March 2020 notes,

Growth was weak but stabilising until the coronavirus Covid-19 hit. Restrictions on movement of people, goods and services, and containment measures such as factory closures have cut manufacturing and domestic demand sharply in China. The impact on the rest of the world through business travel and tourism, supply chains, commodities and lower confidence is growing.

It forecasts “Severe, short-lived downturn in China, where GDP growth falls below 5% in 2020 after 6.1% in 2019, but recovering to 6.4% in 2021. In Japan, Korea, Australia, growth also hit hard then gradual recovery. Impact less severe in other economies but still hit by drop in confidence and supply chain disruption”.

Compared to a year earlier, the once buoyant Chinese economy shrank by between 10 and 20 per cent in January and February 2020. The Economist states,

In the first two months of 2020 all major indicators were deeply negative: industrial production fell by 13.5% year-on-year, retail sales by 20.5% and fixed-asset investment by 24.5% . . . The last time China reported an economic contraction was more than four decades ago, at the end of the Cultural Revolution.

In the United States, the recovery and boom from the Great Recession that started in 2009 came to a screeching halt. Some grim predictions project that as businesses shut down and more than 80 million Americans stay penned at home, unemployment, which had dropped to a historic low of 3.5 per cent, might skyrocket to 20 per cent. This spells disaster as consumer spending drives 70 per cent of the economy, and 39 per cent of Americans cannot handle an unexpected $400 expense.

Various estimates indicate that in the next three months the economy will shrink by anywhere between 14 and 30 per cent, ushering in one of the fastest and deepest recessions in America’s history. This economic bloodletting removes the second boastful pillar of President Trump’s re-election strategy, the robust health of the US economy.

UNCTAD has added its gloomy assessment for the world economy and emerging economies. Launching its report in early March, the Director of the Division on Globalisation and Development Strategies at UNCTAD noted that,

One ‘Doomsday scenario’ in which the world economy grew at only 0.5 per cent, would involve ‘a $2 trillion hit’ to gross domestic product . . . There’s a degree of anxiety now that’s well beyond the health scares which are very serious and concerning . . . To counter these fears, ‘Governments need to spend at this point in time to prevent the kind of meltdown that could be even more damaging than the one that is likely to take place over the course of the year’, Mr. Kozul-Wright insisted.

Turning to Europe and the Eurozone, Mr. Kozul-Wright noted that its economy had already been performing ‘extremely badly towards the end of 2019’ . . . It was ‘almost certain to go into recession over the coming months; and the German economy is particularly fragile, but the Italian economy and other parts of the European periphery are also facing very serious stresses right now as a consequence of trends over (the last few) days’.

The UNCTAD announcement continues,

So-called Least Developed Countries, whose economies are driven by the sale of raw materials, will not be spared either. ‘Heavily-indebted developing countries, particularly commodity exporters, face a particular threat’, thanks to weaker export returns linked to a stronger US dollar, Mr. Kozul-Wright maintained. ‘The likelihood of a stronger dollar as investors seek safe-havens for their money, and the almost certain rise in commodity prices as the global economy slows down, means that commodity exporters are particularly vulnerable’.

Africa will not be spared. According to Fitch Solutions, a consultancy firm,

We have revised down our Sub-Saharan Africa (SSA) growth forecast to 1.9% in 2020, from 2.1% previously, reflecting macroeconomic risks arising from moderating oil prices and the global spread of Covid-19. While the number of confirmed Covid-19 cases in SSA remains low thus far, African markets remain vulnerable to deteriorating risk sentiment, tightening financial conditions and slowing growth in key trade partners. The sharp decline in global oil prices resulting from the failure of OPEC+ to reach agreement on additional production cutbacks will undermine growth and export earnings in the continent’s main oil producers, notably Nigeria, Angola and South Sudan.

In Kenya, there were widespread fears that the coronavirus pandemic would bring the national airline carrier and other companies in the lucrative tourism industry to their knees. Similarly affected will be the critical agricultural and horticultural export industry. Aggravating the sharp economic downturns, some commentators lamented, is widespread corruption. Domestically, the ubiquitous matatu transport industry is groaning under new regulations limiting the number of passengers.

The economy was already fragile prior to the coronavirus crisis. In the words of one commentator in the Sunday Standard of March 23, 2020,

Companies were laying off, malls were already empty even before the outbreak and shops and kiosks and mama mbogas were recording the lowest sales in years. Matters are not helped by the fact that our e-commerce (purchase and delivery) does not account for much due to poor infrastructure and low trust levels.

Another commentator in the same paper on March 17, 2020 wrote, “It’s a matter of time before bleeding economy goes into coma”. He outlined the depressing litany: increased cost of living, gutting of Kenya’s export market, discouragement of the use of hard cash, producers grappling with limited supply, a bleeding stock market, irrational investor fears, and moratorium on foreign travel.

As the crisis intensified, international financial institutions and development agencies loosened the spigots of financial support. On March 12, 2020 the IMF announced,

In the event of a severe downturn triggered by the coronavirus, we estimate the Fund could provide up to US$50 billion in emergency financing to fund emerging and developing countries’ initial response. Low-income countries could benefit from about US$10 billion of this amount, largely on concessional terms. Beyond the immediate emergency, members can also request a new loan—drawing on the IMF’s war chest of around US$1 trillion in quota and borrowed resources—and current borrowers can top up their ongoing lending arrangements.

For its part, the World Bank announced on March 17 that,

The World Bank and IFC’s Boards of Directors approved today an increased $14 billion package of fast-track financing to assist companies and countries in their efforts to prevent, detect and respond to the rapid spread of COVID-19. The package will strengthen national systems for public health preparedness, including for disease containment, diagnosis, and treatment, and support the private sector.

On March 19, the European Central Bank announced,

As a result, the ECB’s Governing Council announced on Wednesday a new Pandemic Emergency Purchase Programme with an envelope of €750 billion until the end of the year, in addition to the €120 billion we decided on 12 March. Together this amounts to 7.3% of euro area GDP. The programme is temporary and designed to address the unprecedented situation our monetary union is facing. It is available to all jurisdictions and will remain in place until we assess that the coronavirus crisis phase is over.

Altogether, The Economist states,

A crude estimate for America, Germany, Britain, France and Italy, including spending pledges, tax cuts, central bank cash injections and loan guarantees, amounts to $7.4trn, or 23% of GDP . . . A huge array of policies is on offer, from holidays on mortgage payments to bail-outs of Paris cafés. Meanwhile, orthodox stimulus tools may not work well. Interest rates in the rich world are near zero, depriving central bank of their main lever . . . What to do? An economic plan needs to target two groups: households and companies.

Some of the regional development banks also announced major infusions of funds to contain the pandemic. On March 18, “The Asian Development Bank (ADB) today announced a $6.5 billion initial package to address the immediate needs of its developing member countries (DMCs) as they respond to the novel coronavirus (COVID-19) pandemic”.

On the same day, the African Development Bank announced “bold measures to curb coronavirus”, but this largely consisted of “health and safety measures to help prevent the spread of the coronavirus in countries where it has a presence, including its headquarters in Abidjan. The measures include telecommuting, video conferencing in lieu of physical meetings, the suspension of visits to Bank buildings, and the cancellation of all travel, meetings, and conferences, until further notice”. No actual financial support was stipulated in the announcement.

Trading Ideological Places

As the economic impact of the coronavirus pandemic escalated, demands for government support intensified from employers, employees and trade unions. The pandemic is wreaking particular havoc among poor workers who can hardly manage in “normal” times. As noted above, across Kenya jobs were already being lost before the coronavirus epidemic. Those in the informal economy are exceptionally vulnerable because of the extensive lockdown the government announced on March 22, 2020.

Those earning a precarious living in the gig economy face special hurdles in making themselves heard and receiving support. With the lockdown of cities, couriers become even more essential to deliver food and other supplies, but they lack employment rights, so that many cannot afford self-isolation if they become sick. Customer service workers at airports and in supermarkets have sometimes been at the receiving end of pandemonium and the anxieties of irate customers.

The pandemic has helped bring political perspective to national and international preoccupations that suddenly look petty in hindsight. For example, as one author puts it in a story in The Atlantic of March 11, 2020, “It’s not hard to feel like the coronavirus has exposed the utter smallness of Brexit . . . Ultimately, Brexit is not a matter of life and death literally or economically. The coronavirus, meanwhile, is killing people and perhaps many businesses”.

The same could be said of many trivial political squabbles in other countries. In the United States, one observer notes in The Atlantic of March 19, 2020,

In the absence of meaningful national leadership, Americans across the country are making their own decisions for our collective well-being. You’re seeing it in small stores deciding on their own to close; you’re seeing it in restaurants evolving without government decree to offer curbside pickup or offer delivery for the first time; you’re seeing it in the offices that closed long before official guidance arrived.

The author concludes poignantly, “The most isolating thing most of us have ever done is, ironically, almost surely the most collective experience we’ve ever had in our lifetimes”. And I can attest that I have seen this spirit of cooperation and collaboration on my own campus, among faculty, staff, and students. But the pandemic also raises questions about how effectively democracy can be upheld under the coronavirus lockdowns. Might desperate despots in some countries try to use the crisis to postpone elections?

Also upended by the coronavirus pandemic are traditional ideological polarities. Right-wing governments are competing with left-wing governments or opposition liberal legislatures as in the United States to craft “big government” mitigation packages. Many are borrowing monetary and fiscal measures from the Great Recession playbook, some of which they resisted when they were in opposition or not yet in office.

In terms of monetary policy, several central banks have cut interest rates. On March 15, 2020, the US Federal Reserve cut the rate to near zero in a coordinated move with the central banks of Japan, Australia and New Zealand. The Fed also announced measures to shore up financial markets including a package of $700 billion for asset purchase and a credit facility for commercial banks. Three days later, as noted above, the European Central Bank launched a €750 billion Pandemic Emergency Purchase Programme. These measures failed to reassure the markets, which continued to plummet.

As for fiscal policy, several governments announced radical spending measures. On 20 March, the UK announced that the government would pay up to 80 per cent of the wages of employees across the country sent home as businesses shut their doors as part of the drastic coronavirus containment strategy. This followed the example of the Danish government that had earlier pledged to cover 75 per cent of employees’ salaries for firms that agreed not to cut staff.

In the United States, Congress began working on a $1 trillion economic relief programme, later raised to $1.8 trillion. The negotiations between the two parties over the proposed stimulus bill proved bitterly contentious. For President Trump and Republicans it was a bitter pill to swallow, given their antipathy to “big government”. It marked the fall of another ideological pillar of Trumpism and Republicanism. For some, the demise of these pillars marks the end of the Trump presidency, which has been exposed for its deadly incompetence, autocratic political culture, and aversion to truth and transparency. We will of course only know for sure in November 2020.

In Kenya employers, workers, unions and analysts have implored the government to undertake drastic measures to boost the economy by providing bailouts, tax incentives and rebates, and social safety nets, as well as increasing government spending. Demands have been made to banks to extend credit to the private sector and to the Central Bank to lower or even freeze interest rates for six months. The Sunday Nation of March 22 reported pay cuts were looming for workers as firms struggled to keep afloat, and that the government had scrambled a war chest of Sh140 billion to shore up the economy and avert a recession.

Home Alone

Home isolation is recommended by epidemiologists as a critical means of what they call flattening the curve of the pandemic. Its economic impact is well understood, less so its psychological and emotional impact. While imperative, social isolation might exacerbate the growing loneliness epidemic as some call it, especially in the developed countries.

According to an article in The Atlantic magazine of March 10, 2020, the loneliness epidemic is becoming a serious health care crisis.

Research has shown that loneliness and social isolation can be as damaging to physical health as smoking 15 cigarettes a day. A lack of social relationships is an enormous risk factor for death, increasing the likelihood of mortality by 26 percent. A major study found that, when compared with people with weak social ties, people who enjoyed meaningful relationships were 50 percent more likely to survive over time.

The problem of loneliness is often thought to be prevalent among older people, but in countries such as the United States, Japan, Australia, New Zealand, and the United Kingdom, “The problem is especially acute among young adults ages 18 to 22”. Research shows that the feeling of loneliness is not a reflection of physical isolation, but of the meaning and depth of one’s social engagements. Among the Millennial and Gen Z generations loneliness is exacerbated by social media.

Several studies have pointed out that social media may be reinforcing social disconnection, which is at the root of loneliness. This is because while social media has facilitated instant communication and made people more densely connected than ever, it offers a poor substitute for the intimate communication and dense and meaningful interactions humans crave and get from real friends and family. It fosters shallow and superficial connections, surrogate and even fake friendships, and narcissistic and exhibitionist sociability.

Loneliness should of course not be confused with solitude. Loneliness can also not be attributed solely to external conditions as it is often rooted in one’s psychological state. But the density and quality of social interactions matters. The current loneliness epidemic reflects the irony of a vicious cycle, a nexus of triple impulses: in cultures and sensibilities of self-absorption and self-invention, some people invite or choose loneliness either as a marker of self-sufficiency or social success, while the Internet makes it possible for people to be lonely, and lonely people tend to be more attracted to the Internet.

But technology can also help mitigate social distancing. To quote one author writing in The Atlantic on March 14, 2020, “As more employers and schools encourage people to stay home, people across the country find themselves video-chatting more than they usually might: going to meetings on Zoom, catching up with clients on Skype, FaceTime with therapists, even hosting virtual bar mitzvahs”. Jointly playing video games, watching streaming entertainment, or having virtual dinner parties also opens bonding opportunities.

Besides the growth and consumption of modern media and its disruptive and isolating technologies, loneliness is being reinforced by structural forces including the spread of the nuclear family, an invention that even in the United States has a short history as a social formation. This is evident in sociological studies and demonstrated in the lead story in the March 2020 edition of The Atlantic.

The article shows that for much of American history people lived in extended clans and families, whose great strength was their resilience and their role as a socialising force. The decline of multigenerational families dates to the development of an industrial economy and reached its apogee after World War II between 1950 and 1975, when it all began falling apart, again due to broader structural forces.

One doesn’t have to agree with the author’s analysis of what led to the profound changes in family structure. Certainly, women did not benefit from the older extended family structures, which were resolutely patriarchal. But it is a fact that currently, more people live alone in the United States—and in many other countries including those in the developing world—than ever before. The author stresses, “The period when the nuclear family flourished was not normal. It was a freakish historical moment when all of society conspired to obscure its essential fragility”.

He continues, “For many people, the era of the nuclear family has been a catastrophe. All forms of inequality are cruel, but family inequality may be the cruelest. It damages the heart”. He urges society “to figure out better ways to live together”. The question is: what will be the impact of the social distancing demanded by the coronavirus pandemic on the loneliness epidemic and the prospects of developing new and more fulfilling ways of living together?

Coronavirus Hegemonic Rivalries

At the beginning of the coronavirus outbreak, China bore the brunt of being both the victims and the victimised. The rest of the world feared the contagion’s spread from China and before long the disease did spread to other Asian countries such as South Korea, Taiwan, Singapore, and Iran. This triggered anti-Chinese and anti-Asian racism in Europe, North America, and even Africa.

For many Africans, it was a source of perverse relief that the coronavirus had not originated on the continent. Many wondered how Africa and Africans would have been portrayed and treated given the long history, in the western and global imaginaries, of pathologising African cultures, societies, and bodies as diseased embodiments of sub-humanity.

Disease breeds xenophobia, the irrational fear of the “other”. Commenting on the influenza pandemic in The Wall Street Journal, one scholar reminds us, “As the flu spread in 1918, many communities found scapegoats. Chileans blamed the poor, Senegalese blamed Brazilians, Brazilians blamed the Germans, Iranians blamed the British, and so on”. One key lesson is that global cooperation is essential to combat pandemics. Unfortunately, that lesson seems to be ignored by some governments in the current pandemic, although, as in other pandemics, good Samaritans also abound.

As China, South Korea, and Japan gradually contained the spread of the disease, and Italy and other European countries turned into its epicenter, and as the contagion began surging in the United States, the tables turned. While the Asian democracies largely managed to contain the coronavirus through less coercive and more transparent ways, it is China that took centre-stage in the global narrative. As would be expected in a world of intense hegemonic rivalries between the United States and China, the coronavirus pandemic has become weaponised in the two countries’ superpower rivalry.

On March 19, 2020, China marked a milestone since the outbreak of the coronavirus when it was announced that there were no new domestic cases; the 34 new cases identified that day were all brought in by people coming from abroad. An article in the New York Times of March 19, 2020, reports,

Across Asia, travellers from Europe and the United States are being barred or forced into quarantine. Gyms, private clinics and restaurants in Hong Kong warn them to stay away. Even Chinese parents who proudly sent their children to study in New York or London are now mailing them masks and sanitizer or rushing them home on flights that can cost $25,000.

Even before this turning point, as coronavirus cases in China declined, the country began projecting itself as a heroic model of containment. It anxiously sought to refurbish its once battered image by exporting medical equipment, experts, and other forms of humanitarian assistance. Such is the new-found conceit of China that, in response to Trump’s racist casting of the “China virus”, some misguided Chinese nationalists falsely charge that the coronavirus started with American troops, and scornfully disparage the United States for its apparently slow and chaotic containment efforts.

Another article in The New York Times of March 18, 2020, captures China’s strategy for recasting its global image.

From Japan to Iraq, Spain to Peru, it has provided or pledged humanitarian assistance in the form of donations or medical expertise — an aid blitz that is giving China the chance to reposition itself not as the authoritarian incubator of a pandemic but as a responsible global leader at a moment of worldwide crisis. In doing so, it has stepped into a role that the West once dominated in times of natural disaster or public health emergency, and that President Trump has increasingly ceded in his ‘America First’ retreat from international engagement.

The story continues,

Now, the global failures in confronting the pandemic from Europe to the United States have given the Chinese leadership a platform to prove its model works — and potentially gain some lasting geopolitical currency. As it has done in the past, the Chinese state is using its extensive tools and deep pockets to build partnerships around the world, relying on trade, investments and, in this case, an advantageous position as the world’s largest maker of medicines and protective masks . . . On Wednesday, China said it would provide two million surgical masks, 200,000 advanced masks and 50,000 testing kits to Europe . . . One of China’s leading entrepreneurs, Jack Ma, offered to donate 500,000 tests and one million masks to the United States, where hospitals are facing shortages.

Some analysts argue that the coronavirus pandemic is accelerating the decoupling of the United States from China that began with President Trump’s trade war launched in 2018. American hawks see the pandemic as bolstering their argument that China’s dominance of certain global supply chains including some medical supplies and pharmaceutical ingredients poses a systemic risk to the American economy. Many others believe Trump’s “America First” damaged not only the country’s standing and its preparedness to deal with the pandemic, but also its ability to create the international solidarity required for its containment and control.

In the words of one author in The Atlantic of March 15, 2020,

Like Japan in the mid-1800s, the United States now faces a crisis that disproves everything the country believes about itself . . . The United States, long accustomed to thinking of itself as the best, most efficient, and most technologically advanced society in the world, is about to be proved an unclothed emperor. When human life is in peril, we are not as good as Singapore, as South Korea, as Germany.

Some commentators even go further, contending that the pandemic is facilitating the process of de-globalisation more generally as countries not only lock themselves in national enclosures to protect themselves, but seek to become more economically self-sufficient. It is important to note that throughout history, there have been waves and retreats of globalisation. The globalisation of the late 19th century, which was characterised by massive migrations, growth of international trade, and expansion of global production chains with the emergence of modern multinational and transnational corporations, retreated in the inferno of World War I and the Great Depression.

The globalisation of the late 20th century, engendered by the emergence of new information and communication technologies and value chains, and the rise of emerging economies as serious players in the world system, among other factors, had already started fraying by the time of the Great Recession. The latter not only pried open the deep inequalities that neo-liberal globalisation had engendered, but also gave vent to a crescendo of nationalist and populist backlashes.

Ironically, the coronavirus pandemic is also throwing into sharp relief the bankruptcy of populist nationalism. It underscores global interconnectedness, that pathogens do not respect our imaginary communities of nation-states, that the ties that bind humanity are thicker than the threads of separation.

Universities Go Online

The coronavirus pandemic has negatively impacted many industries and sectors, including education, following the closure of schools, colleges and universities. However, fear of crowding and lockdowns has also boosted online industries ranging from e-commerce and food delivery to online entertainment and gaming, to cloud solutions for business continuity, to e-health and e-learning.

The coronavirus pandemic is likely to leave a lasting impact on the growth of e-work or telecommuting, and other online-mediated business practices. Before the pandemic the gig economy was already a growing part of many economies, as were e-health and e-learning.

According to the British Guardian newspaper of March 6, 2020, general practitioners (GPs) have been “told to switch to digital consultations to combat Covid-19”. The story elaborates,

In a significant policy change, NHS bosses want England’s 7,000 GP surgeries to start conducting as many remote consultations as soon as possible, replacing patient visits with phone, video, online or text contact. They want to reduce the risk of someone infected with Covid-19 turning up at a surgery and free GPs to deal with the extra workload created by the virus . . . The approach could affect many of the 340m appointments a year with GPs and other practice staff, only 1% of which are currently carried out by video, such as Skype.

Another story in the same paper also notes that supermarkets in Britain have been “asked to boost deliveries for coronavirus self-isolation”.

The educational sector has been one of the most affected by the coronavirus pandemic as the closure of schools and universities has often been adopted by many governments as the first line of defense. It could be argued that higher education institutions have even taken the lead in managing the pandemic in three major ways: shifting instruction online, conducting research on the coronavirus and its multiple impacts, and advising public policy.

Ever since the crisis broke out, I’ve been following the multiple threats it poses to various sectors, especially higher education, avidly devouring the academic media including The Chronicle of Higher Education, Inside Higher Education, University Business, Times Higher Education, and University World News, just to mention a few.

These papers and magazines alerted me early, as a university administrator, to the need to develop coronavirus planning in my own institution. A sample of the issues discussed can be found in the following articles in The Chronicle of Higher Education (see textbox below).

Clearly, if these fifty articles from one higher education magazine are any guide, the higher education sector has been giving a lot of thought to the opportunities and challenges presented by the coronavirus pandemic. Some prognosticate that higher education will fundamentally change. An article in The New York Times of March 18, 2020 hopes that “One positive outcome from the current crisis would be for academic elites to forgo their presumption that online learning is a second-rate or third-rate substitute for in-person delivery”. There will be some impact, but of course, only time will tell the scale of that impact.

Certainly, at my university we’ve learned invaluable lessons from the sudden switch to learning online using various platforms including Blackboard, our learning management system, Zoom, BlueJeans, Skype, not to mention email and social media such as WhatsApp. This experience is likely to be incorporated into the instructional pedagogies of our faculty.

But history also tells us that old systems often reassert themselves after a crisis, at the same time as they incorporate some changes brought by responses to the crisis. As the author of the article on “7 Takeaways” (see textbox below) puts it, “Many forces exerted pressure on the traditional four-year, bricks-and-mortar, face-to-face campus experience before the coronavirus, and they’ll still be there when the virus is conquered or goes dormant”.

It is likely that at many universities previously averse to online teaching and learning, online instructional tools and platforms will be incorporated more widely, creating a mosaic of face-to-face learning, blended learning, and online learning.

Whither the Future

Moments of profound crisis such as the one engendered by the coronavirus pandemic attract soothsayers and futurists. The American magazine, Politico, invited some three dozen thinkers to prognosticate on the long-term impact of the pandemic. They all offer intriguing reflections. For community life, some suggest the personal will become dangerous, a new kind of patriotism will emerge, polarisation will decline, faith in serious experts will return, there will be less individualism, changes in religious worship will occur, as well as the rise of new forms of reform.

As for technology, they suggest regulatory barriers to online tools will fall, healthier digital lifestyles will emerge, there will be a boon for virtual reality, the rise of telemedicine, provision of stronger medical care, government will become Big Pharma, and science will reign again. With reference to government, they predict Congress will finally go digital, big government will make a comeback, government service will regain its cachet, there will be a new civic federalism, revived trust in institutions, the rules we live by won’t all apply, and they urge us to expect a political uprising.

In terms of elections, they foresee electronic voting going mainstream, Election Day will become Election Month, and voting by mail will become the norm. For the global economy, they forecast that more restraints will be placed on mass consumption, stronger domestic supply chains will grow, and the inequality gap will widen. As for lifestyle, there will be a hunger for diversion, less communal dining, a revival of parks, a change in our understanding of “change”, and the tyranny of habit no more.

In truth, no one really knows for sure.

Textbox

- American Colleges Seek to Develop Coronavirus Response, Abroad and at Home, January 28, 2020. Focuses on limiting travel to China and preparing campus health facilities.

- Coronavirus Is Prompting Alarm on American Campuses. Anti-Asian Discrimination Could Do More Harm. February 5, 2020. Focuses on curbing anti-Asian xenophobia and racism on campuses.

- How Much Could the Coronavirus Hurt Chinese Enrollments? February 20, 2020. Focuses on the possible impact of the coronavirus on Chinese enrollments, the largest source of international students in American universities.

- Colleges Brace for More-Widespread Outbreak of Coronavirus, February 26, 2020. Focuses on universities assembling campuswide emergency-response committees, preparing communications plans, cautioning students to use preventive health measures, and even preparing for possible college closures.

- Colleges Pull Back From Italy and South Korea as Coronavirus Spreads. February 26, 2020. Self-explanatory.

- An Admissions Bet Goes Bust: For colleges that gambled on international enrollment, now what? March 1, 2020. Focuses on the dire financial implications of the collapse in the international student market because of the coronavirus crisis.

- The Coronavirus Is Upending Higher Ed. Here Are the Latest Developments. March 3, 2020. Focuses on universities increasingly moving classes online, asking students to leave campus, lobbying for stimulus package from government, imposing travel restrictions, and worrying about future enrollments.

- CDC Warns Colleges to ‘Consider’ Canceling Study-Abroad Trips. March 5, 2020. Self-explanatory.

- Enrollment Headaches From Coronavirus Are Many. They Won’t Be Relieved Soon. March 5, 2020. Focuses on the financial implications of declining prospects for the recruitment of international students.

- The Face of Face-Touching Research Says, ‘It’s Quite Frightening’. March 5, 2020. Highlights research on the difficulties for people not to touch their faces, one of the preventive guidelines against the coronavirus.

- U. of Washington Cancels In-Person Classes, Becoming First Major U.S. Institution to Do So Amid Coronavirus Fears. March 6, 2020. Self-explanatory.

- How Do You Quarantine for Coronavirus on a College Campus? March 6, 2020. Provides guidelines on who should be quarantined, what kind of housing should be provided for quarantined students, the supplies they need, and what to do when students fall ill.

- As Coronavirus Spreads, the Decision to Move Classes Online Is the First Step. What Comes Next? March 6, 2020. Provides advice on making the transition to online classes.

- With Coronavirus Keeping Them in U.S., International Students Face Uncertainty. So Do Their Colleges. March 6, 2020. Provides guidelines on how to help with the travel, visa, financial and emotional needs of international students.

- Going Online in a Hurry: What to Do and Where to Start. March 9, 2020. Provides guidelines on how to quickly prepare online course assignments, assessment, examinations, course materials, instruction, and communication with students.

- Will Coronavirus Cancel Your Conference? March 9, 2020. Self-explanatory.

- What ‘Middle’ Administrators Can Do to Help in the Coronavirus Crisis. March 10, 2020. Provides advice to middle managers in universities on how to communicate with their people, be more responsive and available than usual, convene their own crisis response teams, and keep relevant campus authorities informed of major problems in their units.

- Communicating With Parents Can Be Tricky — Especially When It Comes to Coronavirus. March 10, 2020. Provides advice on how to provide updates to parents, some of whom might oppose the closure of campus.

- Are Colleges Prepared to Move All of Their Classes Online? March 10, 2020. Notes that this is a huge experiment as many institutions, faculty members, and even students have little experience in online learning and provides some guidelines.

- Why Coronavirus Looks Like a ‘Black Swan’ Moment for Higher Ed. March 10, 2020. Offers reflections on the likely impact of the move to online teaching in terms of prompting universities to stop distinguishing between online and classroom programs.

- Teaching Remotely While Quarantined in China. A neophyte learns how to teach online. March 11, 2020. A fascinating personal story by a faculty member of his experience with remote teaching while living under strict social isolation, which has gone better than he expected.

- When Coronavirus Closes Colleges, Some Students Lose Hot Meals, Health Care, and a Place to Sleep. March 11, 2020. On the various social hardships campus closures bring to some vulnerable students.

- How to Make Your Online Pivot Less Brutal. March 12, 2020. Advises that it’s OK to not know what you’re doing and to seek help, to keep things as simple and accessible as you can, and to expect challenges and adjust.

- Preparing for Emergency Online Teaching. March 12, 2020. Provides resource guides for teaching online.

- Academe’s Coronavirus Shock Doctrine. March 12, 2020. Discusses the added pressures facing faculty because of the sudden conversion to online teaching.

- Shock, Fear, and Fatalism: As Coronavirus Prompts Colleges to Close, Students Grapple With Uncertainty. March 12, 2020. Reports how college students are reacting to campus closures with shock, uncertainty, sadness, and, in some cases, devil-may-care fatalism.

- As the Coronavirus Scrambles Colleges’ Finances, Leaders Hope for the Best and Plan for the Worst. March 12, 2020. Reflects on the likely disruptions on university finances from reduced enrollments and donations.

- What About the Health of Staff Members? March 13, 2020. Discusses how best to ensure staff continue to be healthy.

- As Coronavirus Drives Students From Campuses, What Happens to the Workers Who Feed Them? March 13, 2020. Discusses the challenges of maintaining non-essential staff on payroll during prolonged campus closure.

- 2020: The Year That Shredded the Admissions Calendar. March 15, 2020. Self-explanatory.

- How to Lead in a Crisis. March 16, 2020. Insightful advice from the former President of Tulane University during Hurricane Katrina.

- Colleges Emptied Dorms Amid Coronavirus Fears. What Can They Do About Off-Campus Housing? March 16, 2020. Reports on how some institutions have taken a more aggressive approach to limiting the spread of the virus in off-campus housing.

- How to Quickly (and Safely) Move a Lab Course Online. March 17, 2020. The author discusses his positive experience of moving a lab course quickly online while still meeting his learning objectives through lab kits, virtual labs and simulations.

- University Labs Head to the Front Lines of Coronavirus Containment. March 17, 2020. Discusses how university medical centers have taken the lead in coronavirus research and, due to the national shortage of testing kits, used tests of their own design to begin screening patients.

- Hounded Out of U.S., Scientist Invents Fast Coronavirus Test in China. March 18, 2020. An intriguing story of how the US’s crackdown on scholars with ties to China has triggered a reverse brain drain of Chinese-American scholars to China, inadvertently promoting China’s ambitious drive to attract top talent under its Thousand Talents program. It features a scholar and his team that are leading the race to develop coronavirus treatment.

- Coronavirus Crisis Underscores the Traits of a Resilient College. March 18, 2020. Discusses the qualities of resilient institutions including effective communication, management of cash flow, and investment in electronic infrastructure.

- Coronavirus Creates Challenges for Students Returning From Abroad. March 18, 2020. Self-explanatory.

- As Coronavirus Spreads, Universities Stall Their Research to Keep Human Subjects Safe. March 18, 2020. Self-explanatory.

- The Covid-19 Crisis Is Widening the Gap Between Secure and Insecure Instructors. March 18, 2020. Self-explanatory.

- Here’s Why More Colleges Are Extending Deposit Deadlines — and Why Some Aren’t. March 18, 2020. Discusses how some universities are changing their admission processes.

- How to Help Students Keep Learning Through a Disruption. March 18, 2020. Provides guidelines on how to keep students engaged in learning and support instructors throughout the crisis.

- As Classrooms Go Virtual, What About Campus-Leadership Searches? March 19, 2020. Discusses how senior university leadership searches are being affected and ways to handle the situation by reconsidering the steps, migrating to technology, and staying in touch with candidates.

- If Coronavirus Patients Overwhelm Hospitals, These Colleges Are Offering Their Dorms. March 19, 2020. Discusses how some universities are offering to donate their empty dorms for use by local hospitals.

- As Professors Scramble to Adjust to the Coronavirus Crisis, the Tenure Clock Still Ticks. March 19, 2020. Discusses how at many universities junior faculty remain under pressure to meet the tenure timelines despite the various institutional disruptions.

- ‘The Worst-Case Scenario’: What Financial Disclosures Tell Us About Coronavirus’s Strain on Colleges So Far. March 19, 2020. Reports the financial straits facing many universities and that Moody’s Investors Service issued a bleak forecast this week for American higher education.

- As the Coronavirus Forces Faculty Online, It’s ‘Like Drinking Out of a Firehose’. March 20, 2020. Recorded video interviews with four selected instructors by The Chronicle to collect their thoughts on how they are managing the sudden change.

- A Coronavirus Stimulus Plan Is Coming. How Will Higher Education Figure In? March 20, 2020. The article wonders how universities will fare under the massive stimulus package under negotiation in the US Congress. It notes “Nearly a dozen higher-education associations have also asked lawmakers for about $50 billion in federal assistance to help colleges and students stay afloat” and an additional $13 billion for research labs.

- Covid-19 Has Forced Higher Ed to Pivot to Online Learning. Here Are 7 Takeaways So Far. March 20, 2020. The takeaways include the fact that “What most colleges are doing right now is not online education,” “Many of the tools were already at hand,” “The pivot can be surprisingly cheap,” “This is your wake-up call,” “The pandemic could change education delivery forever…”, “… but it probably won’t”.

- ‘Nobody Signed Up for This’: One Professor’s Guidelines for an Interrupted Semester. March 20, 2020. An interesting account of how one faculty member changed his syllabus and communicated with his students.

- The Coronavirus Has Pushed Courses Online. Professors Are Trying Hard to Keep Up. March 20, 2020. Makes many of the same observations noted above.