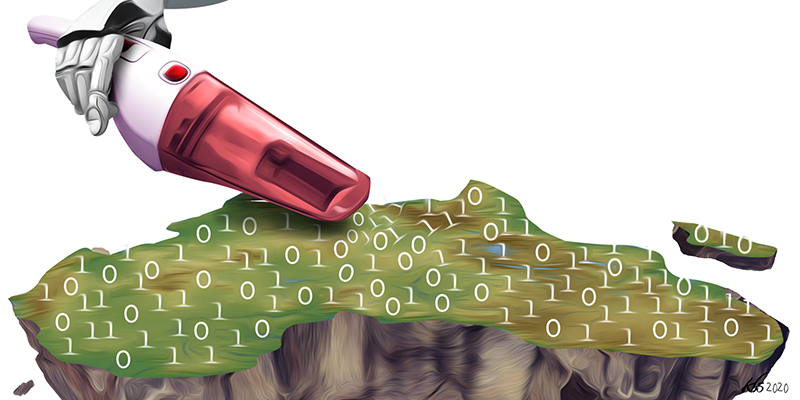

Traditional colonial power seeks unilateral power and domination over colonised people. It declares control of the social, economic, and political sphere by reordering and reinventing the social order in a manner that benefits it. In the age of algorithms, this control and domination occurs not through brute physical force but rather through invisible and nuanced mechanisms such as control of digital ecosystems and infrastructure.

Common to both traditional and algorithmic colonialism is the desire to dominate, monitor, and influence the social, political, and cultural discourse through the control of core communication and infrastructure mediums. While traditional colonialism is often spearheaded by political and government forces, digital colonialism is driven by corporate tech monopolies—both of which are in search of wealth accumulation.

The line between these forces is fuzzy as they intermesh and depend on one another. Political, economic, and ideological domination in the age of AI takes the form of “technological innovation”, “state-of-the-art algorithms”, and “AI solutions” to social problems. Algorithmic colonialism, driven by profit maximisation at any cost, assumes that the human soul, behaviour, and action are raw material free for the taking. Knowledge, authority, and power to sort, categorise, and order human activity rest with the technologist, for whom we are merely data-producing “human natural resources”, observes Shoshana Zuboff in her book, The Age of Surveillance Capitalism.

Zuboff remarks that “conquest patterns” unfold in three phases. First, the colonial power invents legal measures to provide justification for invasion. Then declarations of territorial claims are asserted. These declarations are then legitimised and institutionalised, as they serve as tools for conquering by imposing a new reality. These invaders do not seek permission as they build ecosystems of commerce, politics, and culture and declare legitimacy and inevitability. Conquests by declaration are invasive and sometimes serve as a subtle way to impose new facts on the social world and, for the declarers, they are a way to get others to agree with those facts.

For technology monopolies, such processes allow them to take things that live outside the market sphere and declare them new market commodities. In 2016, Facebook declared that it was creating a population density map of most of Africa using computer vision techniques, population data, and high-resolution satellite imagery. In the process, Facebook arrogated to itself the authority for mapping, controlling, and creating population knowledge of the continent.

In doing so, not only did Facebook assume that the continent (its people, movement, and activities) is up for grabs for the purpose of data extraction and profit maximisation, but Facebook also assumed authority over what is perceived as legitimate knowledge of the continent’s population. Statements such as “creating knowledge about Africa’s population distribution”, “connecting the unconnected”, and “providing humanitarian aid” served as justification for Facebook’s project. For many Africans this echoes old colonial rhetoric: “we know what these people need, and we are coming to save them. They should be grateful”.

Currently, much of Africa’s digital infrastructure and ecosystem is controlled and managed by Western monopoly powers such as Facebook, Google, Uber, and Netflix. These tech monopolies present such exploitations as efforts to “liberate the bottom billion”, help the “unbanked” bank, or connect the “unconnected”: the same colonial tale, now under the guise of technology. “I find it hard to reconcile a group of American corporations, far removed from the realities of Africans, machinating a grand plan on how to save the unbanked women of Africa. Especially when you consider their recent history of data privacy breaches (Facebook) and worker exploitation (Uber)”, writes Michael Kimani. Nonetheless, algorithmic colonialism dressed in “technological solutions for the developing world” receives applause and rarely faces resistance or scrutiny.

It is important, however, to note that this is not a rejection of AI technology in general, or even of AI that is originally developed in the West, but a rejection of a particular business model advanced by big technology monopolies that impose particular harmful values and interests while stifling approaches that do not conform to their values. When practiced cautiously, access to quality data and use of various technological and AI developments does indeed hold potential for benefits to the African continent and the Global South in general. Access to quality data and secure infrastructure to share and store data, for example, can help improve the healthcare and education sectors.

Gender inequalities, which plague every social, political, and economic sphere in Ethiopia, for instance, have yet to be exposed and mapped through data. Such data is invaluable in informing long-term, gender-balanced decision-making, which is an important first step towards societal and structural change. Such data also aids general societal-level awareness of gender disparities, which is central to grassroots change. Crucial issues across the continent surrounding healthcare and farming, for example, can be better understood, and better solutions can be sought, with the aid of locally developed technology. A prime example is a machine learning model, developed by Kenyan researcher Charity Wayua and her team, that can diagnose early stages of disease in the cassava plant.

Having said that, the marvelousness of technology and its benefits to the continent are not what this paper has set out to discuss. There already exist countless die-hard techno-enthusiasts, both within and outside the continent, some of whom are only too willing to blindly adopt anything “data-driven” or AI-based without a second thought to the possible harmful consequences. Mentions of “technology”, “innovation”, and “AI” continually and consistently bring with them evangelical advocacy, blind trust, and little, if any, critical engagement. They also bring with them invested parties that seek to monetise, quantify, and capitalise every aspect of human life, often at any cost.

The atmosphere during a major technology conference in Tangier, Morocco, in 2019 embodied this tech-evangelism. CyFyAfrica, The Conference on Technology, Innovation and Society, is one of Africa’s biggest annual conferences attended by various policy makers, UN delegates, ministers, governments, diplomats, media, tech corporations, and academics from over 65 (mostly African and Asian) nations.

Although these leaders want to place “the voice of the youth of Africa at the front and centre”, the atmosphere was one that can be summed up as a race to get the continent “teched-up”. Efforts to implement the latest, state-of-the-art machine learning tool or the next cutting-edge application were applauded and admired, while the few voices that attempted to bring forth discussions of the harms that might emerge with such technology got buried under the excitement. Given that the technological future of the continent is overwhelmingly driven and dominated by such techno-optimists, it is crucial to pay attention to the precautions that need to be taken and the lessons that need to be learned from other parts of the world.

Context matters

One of the central questions that needs attention in this regard is the relevance and appropriateness of AI software developed with the values, norms, and interests of Western societies to users across the African continent. Value systems vary from culture to culture, including what is considered a vital problem and a successful solution, what constitutes sensitive personal information, and opinions on prevalent health and socio-economic issues. Certain matters that are considered critical problems in some societies may not be considered so in other societies. Solutions devised in one culture may not transfer well to another. In fact, the very problems that the solution is set out to solve may not be considered problems in other cultures.

The harmful consequences of a lack of awareness of context are most stark in the health sector. In a comparative study that examined early breast cancer detection practices in Sub-Saharan Africa (SSA) and high-income countries, Eleanor Black and Robyn Richmond found that applying what has been “successful” in the West, i.e. mammograms, to SSA is not effective in reducing mortality from breast cancer. A combination of contextual factors, such as a lower age profile, presentation with advanced disease, and limited available treatment options, all suggest that self-examination and clinical breast examination as early detection methods serve women in SSA better than medical practice designed for their counterparts in high-income countries. Throughout the continent, healthcare is one of the major areas where “AI solutions” are actively sought and Western-developed technological tools are imported. Without critical assessment of their relevance, the deployment of Western eHealth systems might bring more harm than benefit.

Imported AI tools made in the West by Western technologists may not only be irrelevant and harmful, owing to a lack of transferability from one context to another, but may also hinder the development of local products. For example, “Nigeria, one of the more technically developed countries in Africa, imports 90% of all software used in the country. The local production of software is reduced to add-ons or extensions creation for mainstream packaged software”. The West’s algorithmic invasion simultaneously impoverishes the development of local products and leaves the continent dependent on its software and infrastructure.

Data are people

The African equivalents of Silicon Valley’s tech start-ups can be found in every possible sphere of life around all corners of the continent—in “Sheba Valley” in Addis Abeba, “Yabacon Valley” in Lagos, and “Silicon Savannah” in Nairobi, to name a few—all pursuing “cutting-edge innovations” in sectors like banking, finance, healthcare, and education. They are headed by technologists and those in finance from both within and outside the continent who seemingly want to “solve” society’s problems, using data and AI to provide quick “solutions”.

Yet the attempt to “solve” social problems with technology is exactly where problems arise. Complex cultural, moral, and political problems that are inherently embedded in history and context are reduced to problems that can be measured and quantified: matters that can be “fixed” with the latest algorithm. As dynamic and interactive human activities and processes are automated, they are inherently simplified to the engineers’ and tech corporations’ subjective notions of what they mean. The reduction of complex social problems to a matter that can be “solved” by technology also treats people as passive objects for manipulation. Humans, however, far from being passive objects, are active meaning-seekers embedded in dynamic social, cultural, and historical backgrounds.

The discourse around “data mining”, “abundance of data”, and “data-rich continent” shows the extent to which the individual behind each data point is disregarded. This muting of the individual—a person with fears, emotions, dreams, and hopes—is symptomatic of how little attention is given to matters such as people’s well-being and consent, which should be the primary concerns if the goal is indeed to “help” those in need. Furthermore, this discourse of “mining” people for data is reminiscent of the coloniser’s attitude that declares humans as raw material free for the taking.

Data is necessarily always about something and never about an abstract entity. The collection, analysis, and manipulation of data potentially entails monitoring, tracking, and surveilling people. This necessarily impacts people, directly or indirectly, whether it manifests as a change in their insurance premiums or a refusal of services. The erasure of the person behind each data point makes it easy to “manipulate behavior” or “nudge” users, often towards profitable outcomes for companies. Considerations around the wellbeing and welfare of the individual user, the long-term social impacts, and the unintended consequences of these systems on society’s most vulnerable are pushed aside, if they enter the equation at all.

For companies that develop and deploy AI, at the top of the agenda is the collection of more data to develop profitable AI systems rather than the welfare of individual people or communities. This is most evident in the FinTech sector, one of the prominent digital markets in Africa. People’s digital footprints, from their interactions with others to how much they spend on their mobile top-ups, are continually surveyed and monitored to form data for making loan assessments. Smartphone data such as browsing history, likes, and locations is recorded, forming the basis for a borrower’s creditworthiness.

Artificial Intelligence technologies that aid decision-making in the social sphere are, for the most part, developed and implemented by the private sector, whose primary aim is to maximise profit. Protecting individual privacy rights and cultivating a fair society is therefore the least of its concerns, especially if such practice gets in the way of “mining” data, building predictive models, and pushing products to customers. As decision-making about social outcomes is handed over to predictive systems developed by profit-driven corporations, not only are we allowing our social concerns to be dictated by corporate incentives, we are also allowing moral questions to be dictated by corporate interest.

“Digital nudges”, behaviour modifications developed to suit commercial interests, are a prime example. As “nudging” mechanisms become the norm for “correcting” individuals’ behaviour, eating habits, or exercise routines, those developing predictive models are bestowed with the power to decide what “correct” is. In the process, individuals who do not fit our stereotypical ideas of a “fit body”, “good health”, and “good eating habits” end up being punished, outcast, and pushed further to the margins. When these models are imported as state-of-the-art technology that will save money and “leapfrog” the continent into development, Western values and ideals are enforced, either deliberately or unintentionally.

Blind trust in AI hurts the most vulnerable

The use of technology within the social sphere often, intentionally or accidentally, focuses on punitive practices, whether it is to predict who will commit the next crime or who may fail to repay their loan. Constructive and rehabilitative questions, such as why people commit crimes in the first place or what can be done to rehabilitate and support those who have come out of prison, are rarely asked. Technology designed and applied with the aim of delivering security and order necessarily brings cruel, discriminatory, and inhumane practices to some.

The cruel treatment of the Uighurs in China and the unfair disadvantaging of the poor are examples in this regard. Similarly, as cities like Harare, Kampala, and Johannesburg introduce facial recognition technology, the questions of its accuracy (given that such systems are trained on unrepresentative demographic datasets) and its relevance should be of primary concern, not to mention the erosion of privacy and the surveillance state that emerges with these technologies.

With the automation of the social comes the automation and perpetuation of historical bias, discrimination, and injustice. As technological solutions are increasingly deployed and integrated into the social, economic, and political spheres, so are the problems that arise with the digitisation and automation of everyday life. Consequently, the harmful effects of digitisation and “technological solutions” fall on individuals and communities that are already at the margins of society. For example, as Kenya embarks on the project of national biometric IDs for its citizens, it risks excluding racial, ethnic, and religious minorities that have historically been discriminated against.

Enrolling in the national biometric ID system requires documents such as a national ID card and birth certificate. However, these minorities have historically faced challenges acquiring such documents. If the national biometric system comes into effect, these minority groups may be rendered stateless and face challenges registering a business, getting a job, or travelling. Furthermore, sensitive information about individuals is extracted, which raises questions such as where this information will be stored, how it will be used, and who has access to it.

FinTech and the digitisation of lending have come to dominate the “Africa rising” narrative, a narrative which supposedly will “lift many out of poverty”. Since its arrival on the continent in the 1990s, FinTech has largely been portrayed as a technological revolution that will “leap-frog” Africa into development. The typical narrative presents the microfinance industry as a service that exists to accommodate the underserved and a system that creates opportunities for the “unbanked” who have no access to a formal banking system. Through its microcredit system, the narrative goes, Africans living in poverty can borrow money to establish and expand their microenterprise ventures.

However, a closer critical look reveals that the very idea of FinTech microfinancing is a reincarnation of colonialist-era rhetoric that works for Western multinational shareholders. These shareholders get wealthier by leaving Africa’s poor communities in perpetual debt. In Milford Bateman’s words: “like the colonial era mining operations that exploited Africa’s mineral wealth, the microcredit industry in Africa essentially exists today for no other reason than to extract value from the poorest communities”.

Far from being a tool that “lifts many out of poverty”, FinTech is a capitalist market premised upon the profitability of the perpetual debt of the poorest. For instance, although Safaricom is 35% owned by the Kenyan government, 40% of the shares are controlled by Vodafone, a UK multinational corporation, while the remaining 25% are held mainly by wealthy foreign investors. According to Nicholas Loubere, Safaricom reported an annual profit of US$620 million in 2019, which was directed into dividend payments for investors.

Like traditional colonialism, wealthy individuals and corporations in the Global North continue to profit from some of the poorest communities except that now it takes place under the guise of “revolutionary” and “state-of-the-art” technology. Despite the common discourse of paving a way out of poverty, FinTech actually profits from poverty. It is an endeavour engaged in the expansion of its financial empire through indebting Africa’s poorest.

Loose regulations and the lack of transparency and accountability under which the microfinance industry operates, as well as the overhyped promise of technology, make it difficult to challenge and interrogate its harmful impacts. Like traditional colonialism, those that benefit from FinTech, microfinancing, and various lending apps operate from a distance. For example, Branch and Tala, two of the most prominent FinTech apps in Kenya, operate from their California headquarters and export “Silicon Valley’s curious nexus of technology, finance, and developmentalism”. Furthermore, the expansion of Western-led digital financing systems has a negative knock-on effect on existing local traditional banking and borrowing systems that have long existed and functioned in harmony with locally established norms and mutual compassion.

Lessons from the Global North

Globally, there is an increasing awareness of the problems that arise with automating social affairs, illustrated by ongoing attempts to integrate ethics into computer science programmes within academia, various “ethics boards” within industry, and various proposed policy guidelines. These approaches to developing, implementing, and teaching responsible and ethical AI take multiple forms, perspectives, and directions, and present a plurality of views.

This plurality is not a weakness but rather a desirable strength which is necessary for accommodating a healthy, context-dependent remedy. Insisting on a single AI integration framework for ethical, social, and economic issues that arise in various contexts and cultures is not only unattainable but also imposes a one-size-fits-all, single worldview.

Companies like Facebook which enter African “markets” or embark on projects such as creating population density maps with little or no regard for local norms or cultures are in danger of enforcing a one-size-fits-all imperative. Similarly, for African developers, start-ups, and policy makers working to solve local problems with homegrown solutions, what is considered ethical and responsible needs to be seen as inherently tied to local contexts and experts.

Artificial Intelligence, like Big Data, is a buzzword that gets thrown around carelessly; what it refers to is notoriously contested across various disciplines, and oftentimes it is mere mathematical snake oil that rides on overhype. Researchers within the field, reporters in the media, and industries that benefit from it all contribute to the overhype and exaggeration of the capabilities of AI. This makes it extremely difficult to challenge the deeply ingrained attitude that “all Africa is lacking is data and AI”. The sheer enthusiasm with which data and AI are subscribed to as gateways out of poverty or disease would make one think that any social, economic, educational, or cultural problem is immutable unless Africa imports state-of-the-art technology.

The continent would do well to adopt a dose of critical appraisal when regulating, deploying, and reporting on AI. This requires challenging the mindset that invests AI with God-like power and treats it as something that exists independently of those who create it. People create, control, and are responsible for any system. For the most part, such people are a homogeneous group of predominantly white, middle-class males from the Global North. Like any other tool, AI reflects human inconsistencies, limitations, biases, and the political and emotional desires of the individuals behind it, as well as the social and cultural ecology that embeds it. Just like a mirror, it reflects how society operates: unjust and prejudiced against some individuals and communities.

Artificial Intelligence tools that are deployed in various spheres are often presented as objective and value-free. In fact, some automated systems in domains such as hiring and policing are put forward with the explicit claim that they eliminate human bias. Automated systems, after all, apply the same rules to everybody. Such a claim is in fact one of the single most erroneous and harmful misconceptions as far as automated systems are concerned. As Cathy O’Neil explains, “algorithms are opinions embedded in code”. This widespread misconception further prevents individuals from asking questions and demanding explanations. How we see the world and how we choose to represent it are reflected in the algorithmic models of the world that we build. The tools we build necessarily embed, reflect, and perpetuate socially and culturally held stereotypes and unquestioned assumptions.

For example, during the CyFyAfrica 2019 conference, the Head of Mission of the UN Security Council Counter-Terrorism Committee Executive Directorate addressed work that is being developed globally to combat online terrorism. Unfortunately, the Head of Mission focused explicitly on Islamic groups, portraying an unrealistic and harmful image of global online terrorism. Contrary to such a portrayal, more than 60 per cent of mass shootings in the United States in 2019 were carried out by white-nationalist extremists. In fact, white supremacist terrorists carried out more attacks than any other type of group in recent years in the US.

In Johannesburg, one of the most surveilled cities in Africa, “smart” CCTV networks provide a powerful tool to segregate, monitor, categorise, and punish individuals and communities that have historically been disadvantaged. Vumacam, an AI-powered surveillance company, is fast expanding throughout South Africa, normalising surveillance and re-erecting apartheid-era segregation and punishment under the guise of “neutral” technology and security. Vumacam currently provides a privately owned video-management-as-a-service infrastructure, with a centralised repository of video data from CCTV. Kwet explains that in the apartheid era passbooks served as a means of segregating the population, inflicting mass violence, and incarcerating black communities.

Similarly, “[s]mart surveillance solutions like Vumacam are explicitly built for profiling, and threaten to exacerbate these kinds of incidents”. Although the company claims its technology is neutral and unbiased, what it deems “abnormal” and “suspicious” behaviour disproportionately involves those who have historically been oppressed. What the Vumacam software flags as “unusual behaviour” tends to be dominated by the black demographic, most commonly those who do manual labour, such as construction workers. According to Andy Clarno, “[t]he criminal in South Africa is always imagined as a black male”. Despite its claim to neutrality, Vumacam software perpetuates this harmful stereotype.

Stereotypically held views drive what is perceived as a problem and the types of technology we develop to “resolve” them. In the process we amplify and perpetuate those harmful stereotypes. We then interpret the findings through the looking-glass of technology as evidence that confirms our biased intuitions and further reinforces stereotypes. Any classification, clustering, or discrimination of human behaviours and characteristics that AI systems produce reflects socially and culturally held stereotypes, not an objective truth.

A robust body of research in the growing field of Algorithmic Injustice illustrates that various applications of algorithmic decision-making result in biased and discriminatory outcomes. These discriminatory outcomes often affect individuals and groups that are already at the margins of society, those who are viewed as deviants and outliers: people who do not conform to the status quo. Given that the most vulnerable are disproportionately affected by technology, it is important that their voices are central in the design and implementation of any technology that is used on or around them.

On the contrary, many of the ethical principles applied to AI are firmly utilitarian: the underlying principle is the best outcome for the greatest number of people. This, by definition, means that solutions that centre minorities are never sought. Even when unfairness and discrimination in algorithmic decision-making processes are brought to the fore (for instance, upon discovering that women have been systematically excluded from entering the tech industry, that minorities have been forced into inhumane treatment, or that systematic biases have been embedded into predictive policing systems), the “solutions” sought rarely centre those who are disproportionately impacted. Mitigating proposals devised by corporate and academic ethics boards are often developed without the consultation and involvement of the people who are affected.

Prioritising the voices of those who are disproportionately impacted every step of the way, including in the design, development, and implementation of any technology, as well as in policymaking, requires actually consulting and involving vulnerable groups of society. This, of course, requires a considerable amount of time, money, effort, and genuine care for the welfare of the marginalised, which often goes against most corporate business models. Consulting those who are likely to be negatively impacted might (at least as far as Silicon Valley is concerned) also seem beneath the “all knowing” engineers who seek to unilaterally provide a “technical fix” for any complex social problem.

As Africa grapples with digitising and automating various services and activities, and with protecting against the consequential harm that technology causes, policy makers, governments, and firms that develop and apply various technologies to the social sphere need to think long and hard about what kind of society we want and what kind of society technology drives. Protecting and respecting the rights, freedoms, and privacy of the very youth that the leaders want to put at the front and centre should be prioritised. This can only happen if guidelines and safeguards for individual rights and freedoms are put in place, continually maintained, revised, and enforced. In the spirit of the communal values that unify such a diverse continent, “harnessing” technology to drive development means prioritising the welfare of the most vulnerable in society and the benefit of local communities, not distant Western start-ups or tech monopolies.

The question of technologisation and digitalisation of the continent is also a question of what kind of society we want to live in. The continent has plenty of techno-utopians but few who would stop and ask difficult and critical questions. African youth solving their own problems means deciding what we want to amplify and show the rest of the world: shifting the tired portrayal of the continent (hunger and disease) by focusing attention on the positive, vibrant culture (such as philosophy, art, and music) that the continent has to offer. It also means not importing the latest state-of-the-art machine learning systems or other AI tools without questioning their underlying purpose and contextual relevance, who benefits from them, and who might be disadvantaged by their application. Moreover, African youth involvement in the AI field means creating programmes and databases that serve various local communities, not blindly importing Western AI systems founded upon individualistic and capitalist drives. On a continent where much of the Western narrative is dominated by negative images such as migration, drought, and poverty, using AI to solve our problems ourselves starts with a rejection of such stereotypical images. This means using AI as a tool that aids us in portraying how we want to be understood and perceived: a continent where community values triumph and nobody is left behind.

–

This article was first published by SCRIPTed.